Modern science is advancing at a pace that would have seemed impossible just a few decades ago. Researchers now attempt to model entire climate systems, simulate nuclear reactions, and analyze biological data at a molecular level, work that demands the kind of computing power only a supercomputer can provide.

These efforts push far beyond what everyday machines were designed to handle. Even today’s fastest laptops and enterprise servers quickly reach their limits when faced with problems involving massive calculations or continuous data flow.

This growing gap between ambition and capability has led scientists to rely on supercomputers to explore questions that standard machines simply cannot approach. Traditional computers excel at personal productivity and routine business tasks, but they struggle when computations must be performed simultaneously on an enormous scale.

As scientific questions become more complex, the need for powerful computing systems capable of handling extreme workloads becomes unavoidable. This is where large-scale data processing changes the rules, allowing researchers to test ideas digitally before moving to the physical world.

In this article, you will learn what makes these machines fundamentally different, how they evolved, and why they remain essential in modern research. By the end, you’ll have a clear understanding of what a supercomputer does, how it works, and why its role continues to expand.

What Is a Supercomputer?

At its simplest, this type of system is designed to perform calculations at a scale far beyond what conventional computers can handle.

Simple Definition for General Readers

At its core, a supercomputer is a machine built to perform calculations at extraordinary speed. Unlike ordinary computers that process tasks one at a time or in small batches, these systems are designed to handle millions of operations simultaneously. The goal is not intelligence or decision-making ability, but raw computational capacity.

This distinction matters. A faster computer does not “think better”; it simply processes numbers more quickly. When people hear the term, they often imagine artificial intelligence, but the reality is far more practical. The definition of a supercomputer focuses on performance: specifically, the ability to execute complex calculations across thousands or even millions of processing units at once.

Rather than running typical software applications, these machines are optimized for mathematical workloads such as modeling, simulation, and analysis. They rely on advanced computing technology that prioritizes coordination and speed over versatility.

Why Supercomputers Exist

The reason a supercomputer exists is straightforward: some problems are too large for normal computers to solve within a reasonable time. Weather forecasting, for example, requires analyzing constantly changing atmospheric data across the entire planet.

Drug discovery involves modeling interactions between countless molecular structures. These challenges demand computing power that scales far beyond consumer hardware.

In scientific research, time is often as important as accuracy. Calculations that would take years on a desktop can be completed in hours when distributed properly. This ability allows researchers to test theories, refine predictions, and respond faster to real-world risks. Without such systems, many modern scientific breakthroughs would remain purely theoretical.

A Brief History of Supercomputers

The origins of this technology can be traced to a time when computational speed became a strategic necessity.

Early Supercomputing Era

The origins of the supercomputer date back to the Cold War era, when governments required machines capable of performing advanced scientific and military calculations. Early systems were custom-built, extremely expensive, and limited to national laboratories. Their primary purposes included nuclear research, aerospace engineering, and cryptographic analysis.

These early machines relied on single processors pushed to their physical limits. Engineers focused on maximizing clock speed and memory access, laying the foundation for supercomputing as a distinct field within computing.

Evolution Toward Modern Systems

As hardware technology matured, designers realized that increasing speed alone was no longer efficient. The next major breakthrough came from connecting many processors together. Instead of one extremely fast unit, systems began using clusters of smaller processors working in coordination.

This shift marked the evolution of computing systems toward parallel architectures. Tasks could now be divided into smaller pieces and processed simultaneously. Over time, this approach became dominant, enabling massive scalability and improved reliability. Today’s systems reflect decades of refinement built on this fundamental idea, forming the backbone of modern large-scale scientific computing.

Key Characteristics of Supercomputers

Several defining features separate these systems from traditional computers, shaping how they are built, operated, and used.

Massive Parallel Processing

One defining trait of a supercomputer is its use of massive parallel processing. Instead of relying on a single powerful processor, these systems employ thousands or even millions of cores. Each core performs a portion of the workload at the same time, dramatically reducing total computation time.

This structure allows problems to be broken down into manageable segments. When designed correctly, parallel execution enables calculations that would otherwise be impossible within practical timeframes.
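One way to see why design matters is Amdahl's law, a standard result that this article does not otherwise cover: if only part of a job can run in parallel, the serial remainder caps the achievable speedup. The sketch below is illustrative only; the function name and the 95% and 99.9% parallel fractions are assumptions chosen for the example.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup on `cores` cores when only `parallel_fraction` of the work can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed, illustrative parallel fractions and core counts.
for fraction in (0.95, 0.999):
    for cores in (10, 1_000, 1_000_000):
        speedup = amdahl_speedup(fraction, cores)
        print(f"{fraction:.1%} parallel on {cores:>9,} cores: {speedup:8.1f}x")
```

Under these assumptions, a job that is 95% parallel can never run more than 20 times faster, however many cores are added. This is why workloads have to be structured so that almost all of the work can be split up.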

Extremely High Computational Power

Performance in these machines is measured in floating-point operations per second (FLOPS), with leading systems reaching quadrillions or more. Rather than focusing on everyday speed metrics, engineers evaluate how efficiently the system performs large-scale mathematical tasks. This level of output supports advanced modeling, real-time simulations, and continuous data analysis across vast datasets.

Such capacity is the hallmark of high-performance computing, where precision and throughput matter more than user interaction or graphical output.
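A rough back-of-the-envelope calculation shows what this throughput means in practice. The sketch below assumes a hypothetical job of 10^21 operations and round-number sustained rates for a laptop and an exascale system; none of these figures are measurements of a real machine, and communication and I/O overhead are ignored.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def runtime_seconds(total_operations: float, operations_per_second: float) -> float:
    """Time to finish a job at a sustained rate, ignoring communication and I/O overhead."""
    return total_operations / operations_per_second


# Assumed, illustrative figures (not measurements of any real machine or job).
WORKLOAD = 1e21        # total operations in a hypothetical simulation
LAPTOP_RATE = 1e11     # roughly 100 billion operations per second
EXASCALE_RATE = 1e18   # one quintillion operations per second

laptop_years = runtime_seconds(WORKLOAD, LAPTOP_RATE) / SECONDS_PER_YEAR
exascale_minutes = runtime_seconds(WORKLOAD, EXASCALE_RATE) / 60

print(f"Laptop:   about {laptop_years:.0f} years")
print(f"Exascale: about {exascale_minutes:.0f} minutes")
```

With these assumed numbers the same job takes centuries on the laptop and well under an hour on the exascale system, which is the kind of gap that turns an impractical calculation into a routine one.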

Specialized Infrastructure

Operating a supercomputer requires more than hardware alone. These systems demand dedicated facilities designed to support their physical and electrical needs. Advanced cooling systems are essential, as thousands of processors generate intense heat during operation. Power delivery must remain stable and uninterrupted, and total consumption often rivals that of a small town.

Because of these requirements, facilities are purpose-built to maintain reliability and safety. These features collectively define the characteristics of supercomputers and explain why they exist primarily within research institutions rather than commercial offices.

How Supercomputers Work

A supercomputer does not operate as a single powerful machine. Instead, it relies on many smaller components working together in a highly coordinated way. This structure allows complex problems to be divided into manageable parts, processed simultaneously, and then combined into meaningful results.

Parallel Computing Explained Simply

The core idea behind modern high-end computing is parallel computing. Rather than completing one task before starting another, the workload is divided into thousands of smaller pieces that can be handled at the same time.

A simple analogy is a large construction project. One worker building an entire structure alone would take years. A coordinated team, each responsible for a specific task, can finish the same project far more quickly. In computing terms, each processor focuses on a small portion of the overall calculation, dramatically reducing the total time required.

This approach is especially effective for scientific simulations, where millions of calculations must occur simultaneously. By spreading the workload across many processors, a supercomputer can analyze complex systems that would overwhelm traditional machines.
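The divide, process, and combine pattern can be sketched in a few lines of Python. This is a minimal illustration, not how a real supercomputer is programmed: the function names are hypothetical, and a thread pool stands in for the processors. In standard CPython, threads will not actually speed up this CPU-bound work; production systems spread such tasks across many nodes, typically with a message-passing library such as MPI.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(start: int, stop: int, parts: int) -> list[range]:
    """Divide the half-open interval [start, stop) into `parts` nearly equal ranges."""
    size, extra = divmod(stop - start, parts)
    chunks, begin = [], start
    for i in range(parts):
        end = begin + size + (1 if i < extra else 0)
        chunks.append(range(begin, end))
        begin = end
    return chunks


def partial_sum_of_squares(chunk: range) -> int:
    """The piece of the calculation one worker is responsible for."""
    return sum(n * n for n in chunk)


def parallel_sum_of_squares(limit: int, workers: int = 4) -> int:
    """Sum n*n for n in [0, limit) by splitting the range across workers."""
    chunks = split_into_chunks(0, limit, workers)  # break the problem down
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Hand one chunk to each worker and collect the partial results.
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)  # combine partial results into one answer


print(parallel_sum_of_squares(1_000_000))
```

Each worker only ever sees its own chunk, so adding workers changes how the range is divided but not the final answer.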

Coordination Between Processors

Splitting tasks is only part of the process. What truly defines large-scale systems is how well those parts communicate. Each processor operates as a node within a broader network, constantly exchanging data with others to ensure accuracy and consistency.

These nodes are connected through extremely fast interconnects designed to minimize delays. When one processor completes its portion of a calculation, the results are immediately shared so other processors can continue their work. This constant exchange allows the system to function as a unified whole rather than as isolated components.

Such coordination is what distinguishes advanced distributed computing systems from ordinary clusters. Without precise synchronization, calculations could drift apart, leading to errors or inefficiencies that reduce overall performance.
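To make the synchronization point concrete, the sketch below uses a toy problem that is not from this article: heat spreading along a one-dimensional grid that has been split across four simulated nodes. Each node needs the edge values of its neighbours before every step. All function names are hypothetical, and plain Python lists stand in for the memory of separate machines connected by an interconnect.

```python
def step_single(u, alpha):
    """One explicit diffusion step on the whole grid; the two end cells stay fixed."""
    new = list(u)
    for i in range(1, len(u) - 1):
        new[i] = u[i] + alpha * (u[i - 1] - 2 * u[i] + u[i + 1])
    return new


def split_grid(u, nodes):
    """Cut the grid into contiguous blocks, one per simulated node."""
    size = len(u) // nodes
    blocks = [u[k * size:(k + 1) * size] for k in range(nodes - 1)]
    blocks.append(u[(nodes - 1) * size:])
    return blocks


def exchange_halos(blocks):
    """Communication phase: every node receives its neighbours' edge cells."""
    last = len(blocks) - 1
    return [
        (blocks[k - 1][-1] if k > 0 else None,
         blocks[k + 1][0] if k < last else None)
        for k in range(len(blocks))
    ]


def step_block(block, left, right, alpha):
    """Compute phase: update one node's cells from its own data plus two halo values."""
    padded = [block[0] if left is None else left, *block,
              block[-1] if right is None else right]
    new = [padded[i] + alpha * (padded[i - 1] - 2 * padded[i] + padded[i + 1])
           for i in range(1, len(padded) - 1)]
    if left is None:
        new[0] = block[0]    # outer boundary of the whole grid stays fixed
    if right is None:
        new[-1] = block[-1]
    return new


def simulate_split(u, steps, nodes, alpha=0.25, refresh_halos=True):
    """Run the split simulation; with refresh_halos=False nodes keep stale neighbour data."""
    blocks = split_grid(list(u), nodes)
    halos = exchange_halos(blocks)
    for _ in range(steps):
        if refresh_halos:
            halos = exchange_halos(blocks)
        blocks = [step_block(b, left, right, alpha)
                  for b, (left, right) in zip(blocks, halos)]
    return [value for b in blocks for value in b]


def simulate_single(u, steps, alpha=0.25):
    """Reference run on one undivided grid."""
    u = list(u)
    for _ in range(steps):
        u = step_single(u, alpha)
    return u


grid = [0.0] * 16
grid[8] = 100.0  # a single hot cell
reference = simulate_single(grid, steps=50)
print(simulate_split(grid, steps=50, nodes=4) == reference)
print(simulate_split(grid, steps=50, nodes=4, refresh_halos=False) == reference)
```

When the nodes exchange edge values before every step, the split run reproduces the single-grid result. When they keep working from the values they saw at the start, the pieces diverge from the reference, which is the drift described above.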

Why This Model Is Efficient

This structure is particularly effective for simulations and modeling because real-world systems rarely operate in isolation. Climate patterns, molecular interactions, and physical forces all influence one another simultaneously. Parallel architectures mirror this reality, allowing digital models to behave more like the systems they represent.

As a result, a supercomputer excels at workloads where accuracy, scale, and speed must exist together, making it indispensable in modern scientific research.

Supercomputer Workflow Overview

| Workflow Stage | What Happens | Purpose |
|---|---|---|
| Problem Breakdown | A large computational task is divided into thousands or millions of smaller units. | Makes complex problems manageable and suitable for parallel execution. |
| Task Distribution | Each processor receives a specific portion of the workload. | Ensures all computing resources are used efficiently. |
| Parallel Processing | Multiple processors perform calculations simultaneously. | Significantly reduces total computation time. |
| Inter-Node Communication | Processors exchange partial results through high-speed interconnects. | Maintains accuracy and synchronization across the system. |
| Data Integration | Results from individual processors are combined into a unified output. | Produces meaningful conclusions from fragmented calculations. |
| Simulation or Modeling Output | The system generates final models, forecasts, or simulations. | Enables analysis of complex real-world systems. |

Examples of Supercomputers

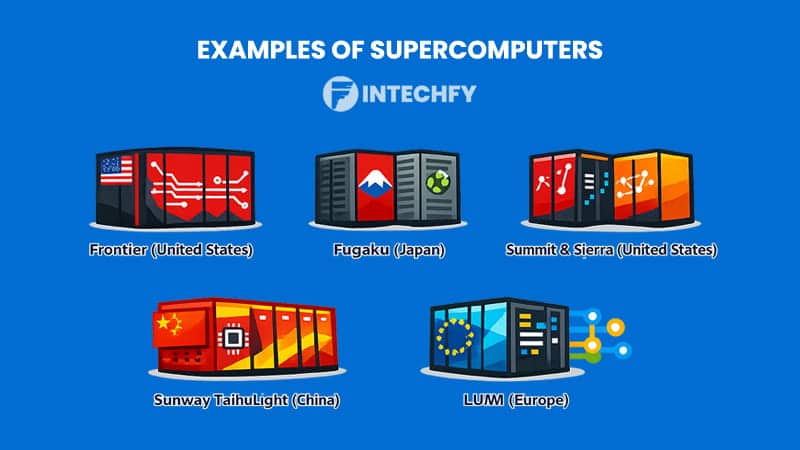

Most large-scale computing systems are not owned by private companies or individuals. They are typically operated by governments and research institutions that require extreme processing power for national laboratories, universities, and scientific collaborations. Over time, several machines have become benchmarks for what advanced computing can achieve.

Frontier (United States)

Frontier, located at Oak Ridge National Laboratory, represents a major milestone in computing history. It was among the first systems to surpass the exascale threshold, meaning it can perform more than one quintillion calculations per second.

This capability allows Frontier to support complex scientific simulations, including climate modeling, materials science, and energy research. Its architecture combines powerful processors with advanced accelerators, enabling researchers to run experiments that previously existed only in theory. Within the global research community, Frontier is often cited among the world’s fastest computing systems.

Fugaku (Japan)

Developed by RIKEN and Fujitsu, Fugaku gained recognition not only for speed but also for balance and efficiency. Unlike many systems that rely heavily on external accelerators, Fugaku uses advanced general-purpose processors optimized for scientific workloads.

This design makes it highly adaptable across different research fields, from disaster prediction to biomedical analysis. During global health studies, Fugaku demonstrated how large-scale computation could accelerate modeling and data evaluation. Its versatility has made it one of the most respected systems in the field.

Summit and Sierra (United States)

Summit and Sierra were designed with research specialization in mind. Operated by U.S. national laboratories, these systems focus heavily on simulations related to physics, national security, and energy science.

Both machines integrate traditional CPUs with high-performance accelerators, allowing them to process large datasets efficiently. Their architectures helped shape many of the design principles used in newer systems, influencing how modern facilities approach scalability and reliability.

Sunway TaihuLight (China)

Sunway TaihuLight stands out for its use of domestically developed processors. Rather than relying on foreign hardware, its design emphasizes national technological independence.

The system is capable of handling enormous scientific workloads, particularly in engineering and climate research. Its success demonstrated that innovation in system architecture can be just as important as raw processing speed, placing it firmly among globally recognized large-scale machines.

LUMI (Europe)

LUMI serves as one of Europe’s largest shared research computing platforms. Designed to support scientists across multiple countries, it emphasizes collaboration and accessibility within the research community.

Its architecture supports artificial intelligence, climate science, and data-intensive modeling, allowing institutions to share computational resources efficiently. By bringing together regional expertise, LUMI highlights how large-scale systems can function as collective tools rather than isolated assets.

Across these examples, the role of a supercomputer becomes clear. Each system reflects regional priorities, scientific goals, and engineering philosophies, yet all serve the same purpose: enabling discovery at a scale unreachable by conventional machines.

Real-World Applications of Supercomputers

The practical value of advanced computing becomes most visible when it is applied to real-world problems. A supercomputer is rarely built for experimentation alone; it exists to support research that would otherwise be impossible to conduct within reasonable timeframes. Across multiple disciplines, these systems allow scientists to explore scenarios that cannot be tested physically or safely in the real world.

Scientific Research and Simulations

In physics, chemistry, and materials science, scientific simulations play a central role in modern discovery. Researchers can model atomic interactions, test the behavior of new materials, or analyze extreme physical environments without constructing expensive prototypes. These digital experiments reduce risk while accelerating innovation.

Such work is commonly carried out within national laboratories and major research institutions, where large datasets and long-running calculations are routine. A supercomputer enables scientists to adjust variables repeatedly, compare outcomes, and refine theories with a level of precision that traditional computing cannot deliver.

Weather Forecasting and Climate Modeling

Accurate weather prediction depends on analyzing massive volumes of atmospheric data collected from satellites, sensors, and ocean buoys. Climate systems are highly interconnected, meaning small changes in one region can influence conditions elsewhere.

Large-scale computing allows meteorologists to simulate storms, heat waves, and long-term climate patterns in detail. These models improve disaster prediction, giving governments and emergency agencies more time to prepare for floods, hurricanes, or extreme temperatures. The ability to process data continuously makes a supercomputer essential for transforming raw environmental information into actionable forecasts.

Medical and Health Research

In healthcare, advanced computation supports drug discovery, genetic analysis, and disease modeling. Instead of testing thousands of chemical compounds manually, researchers can simulate how molecules interact at a biological level.

This approach shortens development cycles and helps identify promising treatments earlier. During health crises, computational modeling has also been used to study virus transmission and treatment strategies. These applications demonstrate how digital analysis can complement laboratory work rather than replace it.

Industry analysis reflects the growing demand for such capabilities. According to The Business Research Company’s Supercomputers Global Market Report 2026, the global supercomputer market was estimated at around 15.66 billion USD in 2025 and is projected to reach approximately 37.33 billion USD by 2030, a compound annual growth rate near 18.9 percent.

This growth highlights how deeply advanced computation has become embedded in scientific and industrial research.
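The quoted growth rate can be checked against the two market figures with the standard compound annual growth rate formula. The sketch below uses only the numbers cited above; the function and variable names are illustrative.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Constant yearly growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Market estimates quoted in the text, in billions of USD.
market_2025 = 15.66
market_2030 = 37.33

rate = compound_annual_growth_rate(market_2025, market_2030, years=5)
print(f"Implied growth rate: {rate:.1%} per year")
```

The result comes out at roughly 19 percent per year, in line with the reported figure once rounding of the published estimates is taken into account.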

Estimated Cost of a Supercomputer

Building and operating a supercomputer involves far more than purchasing processors. Cost reflects the scale of the system and the environment required to keep it running reliably.

Why These Systems Are So Expensive

Hardware represents only one portion of the total investment. Thousands of processors, vast memory banks, and high-speed networking equipment must function together without failure. Beyond hardware, specialized buildings are often required to support cooling, structural load, and power delivery.

Energy demand also contributes significantly. Continuous operation places heavy strain on electrical infrastructure, while cooling systems must remove large amounts of heat. Skilled engineers are needed to maintain performance, manage updates, and prevent downtime, adding long-term operational expense.

Typical Cost Range

The price of a supercomputer can range from several million dollars for smaller research systems to hundreds of millions for national-scale installations. Costs vary depending on performance goals, energy efficiency, and expected lifespan.

Supercomputer Cost Breakdown

| Cost Factor | Explanation |
|---|---|
| Hardware | Processors, memory, networking |
| Infrastructure | Buildings, cooling, power |
| Energy | Continuous electricity use |
| Maintenance | Skilled engineers and upgrades |

These combined factors explain why such machines remain concentrated in large institutions rather than commercial offices.

Limitations and Challenges of Supercomputers

Despite their capabilities, a supercomputer is not without significant constraints. These limitations influence how and where such systems can be deployed.

Energy Consumption Issues

One of the most pressing challenges is energy consumption. Large facilities require enormous amounts of electricity to operate continuously. Visual data from the Global Innovation Index 2025 published by WIPO indicates that some installations consume power comparable to tens of thousands of households each year.

This demand raises concerns about sustainability, operational cost, and environmental impact. As performance increases, balancing speed with efficiency becomes an ongoing challenge.
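A quick calculation shows how a comparison with household consumption can arise. The inputs below are assumptions chosen purely for illustration, a facility drawing 20 megawatts around the clock and a household using 10,000 kilowatt-hours per year; they are not figures from the WIPO report or from any specific installation.

```python
HOURS_PER_YEAR = 24 * 365


def households_equivalent(facility_megawatts: float, household_kwh_per_year: float) -> float:
    """Number of households whose annual electricity use matches a facility running all year."""
    facility_kwh_per_year = facility_megawatts * 1_000 * HOURS_PER_YEAR
    return facility_kwh_per_year / household_kwh_per_year


# Assumed, illustrative inputs: 20 MW continuous draw, 10,000 kWh per household per year.
print(f"{households_equivalent(20, 10_000):,.0f} households")
```

With these assumed inputs the facility uses as much electricity in a year as more than seventeen thousand households, so the order of magnitude depends heavily on both the facility's draw and the household figure used.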

Cooling and Physical Constraints

High computational density produces intense heat. Advanced cooling systems are required to prevent component failure, often involving liquid cooling or specialized airflow designs. Physical space, structural support, and noise management also become critical factors in system design.

Limited Accessibility

Access remains another major limitation. These machines are not available to individuals or small organizations. Usage is typically restricted to governments, universities, and major research institutions, often through scheduled allocations. As a result, advanced computing power remains a shared and carefully managed resource.

Together, these factors illustrate the broader limitations of supercomputers, showing that extraordinary performance comes with equally significant operational demands.

Supercomputer vs Other Computing Systems

Computers can be classified in many ways depending on their purpose, scale, and design. Some are built for personal use, others for enterprise operations, and a few for extreme scientific workloads. Within this spectrum, a supercomputer occupies the highest tier, not because it replaces other systems, but because it serves a fundamentally different role.

To better understand where it fits, the comparison below highlights how major computing systems differ in function and use:

- Mainframe computer: Designed for reliability and continuous operation, mainframes are commonly used by banks, airlines, and government agencies. Their primary strength lies in handling massive volumes of transactions rather than performing complex scientific calculations.

- Minicomputer: Once widely used in mid-sized organizations, minicomputers acted as shared systems before modern servers became dominant. Today, they are largely considered transitional technology in the evolution of enterprise computing.

- Microcomputer: This category includes desktops and laptops intended for individual users. While modern microcomputers are increasingly powerful, they prioritize flexibility and everyday productivity over large-scale computation.

- Server computer: Servers form the backbone of enterprise infrastructure, managing databases, applications, and cloud platforms. They are optimized for multi-user access and reliability, not for intensive scientific modeling.

- Workstation computer: Often used by engineers and designers, workstations deliver higher performance than typical personal computers. However, even advanced models remain limited when tasks require massive parallel execution.

- Embedded computer: Found inside vehicles, appliances, and industrial equipment, embedded systems perform highly specific functions. Their design emphasizes efficiency and stability rather than versatility.

- Personal computer: Built for communication, content creation, and daily tasks, personal computers offer accessibility and convenience but are not intended for large-scale analytical workloads.

- Digital computers: Most modern systems fall into this category, relying on binary logic to process information with speed and consistency.

- Analog and hybrid computers: Though less common today, these systems still appear in specialized scientific and control environments where continuous data representation is beneficial.

Within this entire classification, the supercomputer stands apart. It is built specifically for problems that cannot be efficiently distributed across conventional machines, focusing on scale, precision, and sustained computational performance rather than general usability.

Conclusion

Throughout this discussion, it becomes clear that advanced computing systems serve very different roles depending on their design goals. While everyday machines support productivity and communication, large-scale scientific platforms exist to answer questions far beyond routine computation.

A supercomputer represents the extreme end of this spectrum. Its strength lies in processing enormous volumes of data, running complex simulations, and supporting research that shapes modern science. At the same time, these systems come with clear limitations, including cost, energy demands, and limited accessibility.

Despite these challenges, their value remains undeniable. From climate modeling to medical research, such systems continue to enable insights that would otherwise remain unreachable. As global challenges grow more complex, the need for this level of computational capability remains as relevant as ever.

FAQs About Supercomputers

What defines a supercomputer?

A supercomputer is defined by its ability to perform calculations at an extremely high scale using thousands or millions of processing cores working simultaneously. Its classification depends on performance capability rather than physical size or appearance.

What is inside a supercomputer?

These systems typically contain large numbers of processors, extensive memory, high-speed networking, and specialized cooling infrastructure. All components are designed to operate together under continuous heavy workloads.

How much RAM does a supercomputer have?

Memory capacity can range from hundreds of terabytes to several petabytes, depending on the system’s purpose and design. Large memory pools allow complex simulations to run without constant data transfers.

How powerful is a smartphone vs. a supercomputer?

A smartphone is optimized for efficiency and mobility, while a supercomputer is designed for sustained calculation at massive scale. Even thousands of smartphones combined cannot match the coordinated processing power of such systems.

Which country has the best supercomputer?

Leadership changes over time, but countries such as the United States, Japan, China, and several European nations consistently operate the world’s most advanced systems through national research programs.